The Aotearoa AI Summit 2025 offered a packed snapshot of how New Zealand leaders and organizations are positioning themselves with AI and what needs to happen to prepare for the future. The room in Auckland on September 18, 2025 was filled with adopters and enthusiasts immersed in AI like I am, and the presentations gave a wide sampling of what has been happening in New Zealand. Below are some of the things that I found interesting and some of the issues people raised that we need to consider as this tech seemingly hurtles forward at breakneck pace into our lives and work.

Megan Tapsell (GM Enterprise and Pacific Technology, ANZ; Outgoing Chair, AI Forum NZ) opened by showing stunning AI-generated videos of Māori that had been created with careful attention to cultural appropriateness. Regarding the future, she said: “This is not about the tech; it’s about what we choose to do with it, when we put AI in our hands.” She passed the mic to incoming AI Forum Chair Dr. Mahsa McCauley, who has championed ethical use of AI technologies and education through her roles as Associate Professor at AUT and founder of She Sharp.

Ministerial Address: An update on New Zealand’s AI Strategy 2025 – Dr. Shane Reti

Dr. Shane Reti (Minister of Science, Innovation and Technology, NZ Government) gave an update on New Zealand’s AI Strategy 2025 and announced a doubling of AI R&D funding to $70 million over seven years through the New Zealand Institute for Advanced Technology. He said we need to bolster public confidence in AI, since surveys show trust is low, and that NZ has advantages in data center infrastructure it could leverage. Digital twins are on the agenda for funding as well.

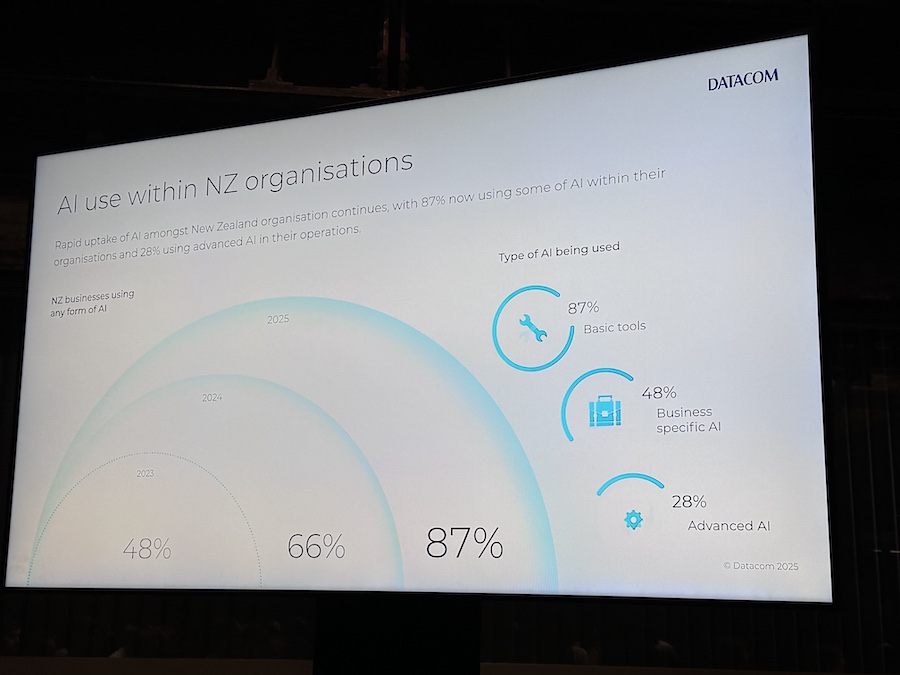

AI in Aotearoa: Shaping our future with purpose and pace – Greg Davidson

Greg Davidson (Group CEO, Datacom Group) said the four areas many organizations are starting with in their AI journey are 1. Customer service, 2. Software development, 3. Sales and marketing / SEO, and 4. Document processing. He mentioned a Stanford study showing, based on payroll data, that AI is already making a dent in the workforce; this is not something two years away but is happening now. The traditional software development lifecycle has been turned on its head. One use case is how farmers are using conversational AI to get answers to complex questions, and the AI knows when to pass off a task to a human. Another use case is in local government, where AI can process information for consent applications. A third use case is an AI-powered payroll assistant with guardrails.

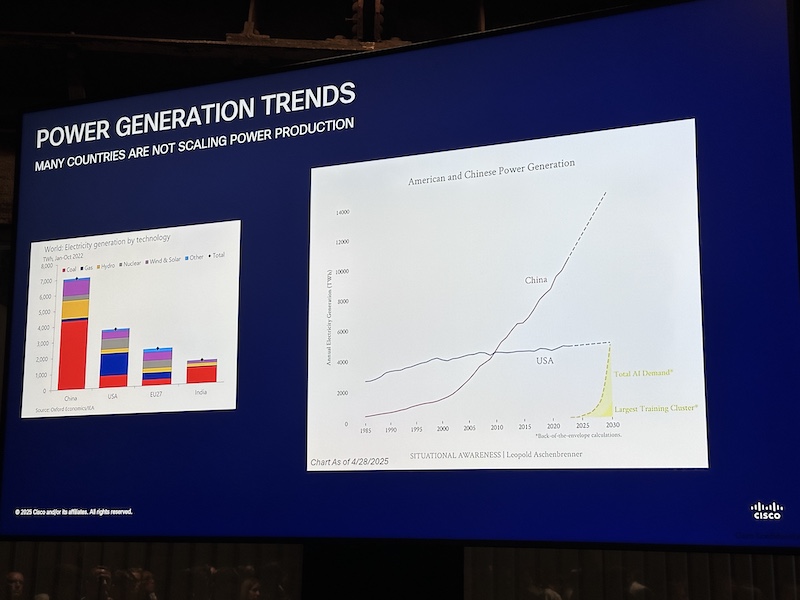

He discussed how infrastructure is a looming issue with the rise in AI. Export restrictions and limitations on NVIDIA products have led to power shortages in the US and Australia. As a country, NZ needs more investment in fiber cabling, which can be fragile, and more power, because processing AI tasks is power-intensive. He challenged us to consider what self-determination looks like in this new world. Is it control over data? Confidence that AI will provide correct information when asked? For businesses and organizations to benefit from AI, they need reliable processing and connectivity. It’s not just about adoption; infrastructure must be considered for NZ to stay competitive among bigger countries. He explored the idea that organizations need to know when to move from a conversation about productivity to one about an engineering problem, and need to build a framework for measuring success with AI projects.

AI for Aotearoa – A Unique Path Forward – Dave Siroky

Dave Siroky (CTO – AI, Cisco) also discussed the infrastructure issue, noting that there has been massive investment in AI data centers but that power production is not scaling in top countries, and that export controls out of the US are a factor. Reasoning models currently use about 20 times more tokens and require more compute. Plus there’s the challenge of latency and data movement – you can’t easily move large amounts of data, such as healthcare images, to and from GPUs in faraway data centers. There also aren’t many GPUs available from hyperscalers in or near the Australia/NZ region. The shift possible with AI is to move from navigating menu dialog boxes to talking to software, to have an AI observability dashboard to check usage, to have an AI defence dashboard for security and guardrails, etc.

Panel on Data Centres and Sovereign AI – Dave Siroky, Graeme Muller, Amy Dove, and Elle Archer

Dave Siroky was then joined by others including Graeme Muller (Chief Executive, NZTech Group), Amy Dove (Partner, Deloitte), and Elle Archer (Chair, Te Hapori Matihiko) to discuss the concept of sovereign AI and what it could mean for New Zealand.

The question is how does NZ build its own sovereign AI capability? There’s an opportunity to fine-tune an OpenAI model on a NZ data set if people put in the work to curate high-quality data from NZ. People need to consider what values and culture they want, and what kind of AI they want for their organization or country. The care and curation of the data is key, but so is the infrastructure to support the use of that data for AI purposes. Communities can be asked what they want.

Quit Doomscrolling: Real AI Wins You Won’t See on Your LinkedIn Feed – Dave Howden

Dave Howden (CEO and Co-founder, Supahuman) talked about how lots of organizations have staff doing things humans weren’t meant to do, e.g. tedious tasks like going through lots of documents. He raised the idea of a possibilitarian, defined as “someone who actively seeks and believes in the potential for good and positive outcomes in any situation, even amidst challenges and darkness”.

He offered three case studies of using AI to reduce tedious work and enable people to spend more time on higher-value tasks. At Marine and Specialised Technologies Academy (MAST), which is a Private Training Establishment (PTE), tasks include making assessments and handling compliance. Their goal is to help people learn about boatmaking, but they have 400 unit standards from NZQA to work with. It used to take weeks for their technical writer to develop materials to support these, and they now have an AI trained on relevant information that significantly speeds up this task, with the work still reviewed by humans. At Soil and Rock Consultants, they have to write geotechnical reports for council. AI is helping them spend more time on site visits and engineering analysis and less time on report admin. At Jani-King commercial cleaning, AI cut the time their sales team needs to gather leads from 15 minutes to 90 seconds. Howden called it a leadership moment to want to reduce tedious tasks in an organization and free people up for higher-value work.

Panel on Exploring AI Productivity and Innovation in the AEC Sector: Opportunities, Outcomes, and Future Directions – Pamela Bell, Ali Hamlin-Paenga, Hema Sridhar, moderator Maria Mingallon

Moderator Maria Mingallon (Knowledge & Information Manager and AI Lead, Mott MacDonald) led the conversation with Pamela Bell (Chief Everything Officer, Building Institute Aotearoa), Ali Hamlin-Paenga (CEO, Te Matapihi), and Hema Sridhar (Deputy Director, Koi Tū Centre for Informed Futures) about the opportunities for AI to address issues in the Architecture, Engineering, and Construction (AEC) sector.

Hema Sridhar asked what our cities will look like in 10-20 years: will urban spaces have room for nature, and how do we allow birds and other wildlife to thrive in the middle of the city? When there are wicked problems, AI can help address them. New Zealand needs to look beyond a 3-year political cycle, but has not been particularly good at foresight as a nation. People need to be less reactive in policy making and more proactive in taking action.

Pamela Bell gave the perspective from construction and how there’s lots of complexity and compliance but AI can help and is being used for tendering and procurement, HR, and compliance tasks. Construction contributes 40% of landfill waste so improving efficiency can have a big impact. However, there’s an inequity with big companies having had LLMs for years and being ahead of the 90% SMEs in NZ.

Ali Hamlin-Paenga said that governments come and go but as a Māori housing provider they are always there. They have a new digital intelligence with an indigenous lens launching internationally soon. They also want to use gamification so young people are prepared to want to use technology to address issues such as homelessness. She emphasized that we need to have a capable workforce and take action when needed. If there’s no policy, create it. If there’s no decision-maker, do it. She said we need to get out of the way of our young people, while using older generations’ wisdom and guidance. There are opportunities, for example, to use AI to help improve processes in the Māori Land Court space and in the way providers interact with local councils.

Panel: What’s next in AI? Convergence of AI with other emerging technology – Michael Witbrock, Ming Cheuk, Matt Bain, moderator Hema Sridhar

Moderator Hema Sridhar (Deputy Director, Koi Tū Centre for Informed Futures) led the panel of Michael Witbrock (Professor, University of Auckland), Ming Cheuk (Chief Technical Officer, ElementX) and Matt Bain (Director of AI and Marketing, Spark) discussing how AI is progressing, the role of universities going forward, people’s capabilities with AI, and productivity issues.

They explored the potential in AI technologies and the challenge for organizations to think further ahead into the future about how society might change. AI can enable many technologies because it has few constraints, such as making things smaller than a human cell and building more advanced robotics. Questions to consider include: how can we accelerate the rate at which teams adopt AI, how do we develop capabilities at scale, how do we think a lot further ahead about how customers will interact with our products, how do we think about business when business models are built for slow change, and how do we put in place affordances for the more likely scenarios rather than focusing on specific risks? AI is also not just a software engineering team issue but a whole-of-organization one, and cross-disciplinary teams get better results.

It’s hard to imagine how universities can keep up with the pace of change. The next question is what universities are for. If they’re not for getting skills for jobs, are they going to go back to their earlier purpose of being a place where people learn about themselves and their culture, and if so, how can we lean in more to that purpose? Jobs are changing, and in 6 months to 3 years, many jobs may not exist as they are today as AI changes them.

They covered the topic of New Zealand’s historic productivity issue and population size. Some thought there was now no excuse for being behind, because New Zealand has small businesses that should be able to move faster and prior constraints don’t matter in the same way with AI. For example, New Zealand could be a $100-million economy even with only 5 million people. If AI is the next big productivity leap, how can New Zealand take advantage of it and embrace a more risk-taking culture like that of the US?

Regarding the $70 million fund for AI that was announced earlier, the panel asked how it could be an opportunity for people and organizations to contribute to rapid national change, rather than just thinking about how it will help them at an individual level.

Panel: Designing AI that puts people first – Dr Amanda Williamson, Pedro Ramirez, James Lawrence

In this panel, Dr Amanda Williamson (Director – AI Institute, Deloitte), Pedro Ramirez (Digital Strategy Practitioner – AI Work Programmes, Department of Internal Affairs), and James Lawrence (GM – AI Centre of Enablement, Fonterra) discussed the use and adoption of AI in their organizations and what young people should consider for their future pathway.

James Lawrence discussed how many staff are using generative AI at Fonterra and said that once the training is in place, adoption is the next step. Policies, frameworks, and education were last year’s focus, as well as offering attractive alternatives to mainstream AI tools, which might mean encouraging staff to use Copilot and custom GPTs. There is a need for both a formal baseline of training at the introductory level and leadership support in having conversations with their teams and showing relevant use cases in their context. CEO support should be leveraged so executive team members are pushing AI in their teams. Data and information management are also becoming more important. Regarding a question about how to measure value, he said sometimes simple is best: you can use the same metrics as in years past, such as ones that finance and accounting teams are comfortable with, as well as adoption metrics (users per month, or prompts per month). The better educated staff are, the higher quality their use cases for AI can become. He also noted that since most processes are about making a decision, we should ask whether we need to have an AI replicate each step of the process, or whether an AI agent could skip the middle bits and jump to making a decision. Asked what teens should study in preparation for this future, he thought practical skills, soft skills, curiosity, critical thinking, and a focus on learning habits while having fun will be relevant.

Pedro Ramirez gave his perspective from the government side and said a survey of 70 organizations in the public service in 2024 vs. 2025 showed that usage has gone up and friction has gone down as the tech gets easier. The public service is educating staff about AI through a masterclass program with executives, as well as AI training that is practical and has participants work on a solution, not just learn about theory. He noted that NZ has an agility that other jurisdictions can only dream of. He thought the government should exemplify what safety and responsibility mean when it comes to AI. Regarding a question about how to design for choice and the digital divide with AI products and services, he discussed how the conversation can be about enablement rather than substitution with AI, such as your GP being able to pay attention to you instead of staring at a computer screen taking notes. He noted the Public Service AI Framework has five principles (inclusive, sustainable development; human-centred values; transparency and explainability; safety and security; accountability) and that since trust is the currency of government, people should think about how we ethically engineer the next phase of AI.

Dr Amanda Williamson discussed how job roles are shifting so that people now act as moderators of AI output. She suggested we should be thinking about what the next wave of capability could mean for us, and how we prepare for what’s next.

MC Julia Pahina (CEO & Co-Founder, Fibre Fale) followed up the panel with a note about the trust piece that had been discussed several times, challenging leaders to first demonstrate that they are trustworthy, visionary leaders when it comes to AI if they want staff and the public to get on board.

Roundtable on AI and Education

I stepped in to co-facilitate a roundtable on AI and Education on the topic of how to create time and space for educators to upskill in AI literacy. My group had a good discussion about the challenges that educators across different levels face in finding spare time in their busy schedules to learn about this new technology and see how it could help them. Some ideas were to help people surface good content and make bite-sized learning, raise the profile of existing communities of practice and champions, get leaders on board, emphasize the ‘why care’ aspect, set aside time during existing staff meetings, and consider mentorship opportunities.

AI Hackathon Festival: Finalist Pitching Session and 2025 Awards

There were four pitches from the finalists of the AI Hackathons that had been held earlier in the year. Wilding was a tool that would use AI to spot sapling pines earlier, so people could remove them while removal costs only a few dollars; once the trees get larger, they cost $250 each to remove. Robin was an AI companion for seniors focused on health and wellbeing. Airie was an AI immigration planner that turned the process of looking at immigrating to a new country from a cold bureaucratic form into a conversation. Empathy was an AI that spotted challenges for young people early to help them with a personalized plan for their learning so they could take action. The Empathy team, from South Auckland, won the People’s Choice Award!

Quick-fires: AI driven transformation within Aotearoa

Arash Tayebi (Kara.tech) covered an AI tool that translates text into American Sign Language (ASL) with New Zealand Sign Language (NZSL) planned next. He said he soon discovered it was an engineering and culture and history problem, not just a technical one, so it had more complexity to consider. They have the world’s largest dataset for ASL and he showed an example of the tool translating text into ASL.

Gavin Sharkey (Managing Director and Co-founder, Kōwhai AI) and Richard Wright (Head of Data and Analytics and AI Lead, Lotto NZ) discussed how AI could be used to aid in biodiversity and wildlife conservation efforts, such as in Wildlife.AI, in making a virtual fence more cheaply than a real one, and in addressing penguin losses due to the degradation of the Hauraki Gulf. Digital twins can be used in biodiversity efforts, such as to see what would happen if kiwi were placed in an area. Digital twins can also be used to simulate what might happen if changes were made in the lotto game or a jackpot, or to address gambling harm.

Professor Alexandra Andhov (Director, Centre for Advancing Law and Technology Responsibly, University of Auckland) closed out the presentations by discussing how corporations like Meta are building the equivalent of leaky buildings in the 1990s, but unlike with buildings, we’re sacrificing more of people’s health, children’s wellbeing, etc. She proposed thinking of regulation not as a noun but as a verb, something akin to what a lighthouse keeper would do to be watchful and look out for distress. Tech companies use NZ to test because it’s too small to be a problem if things go wrong. What we can do is make innovation here for our people, on our terms. She asked us to think about what if we had systems built around our rights and our culture, something to tell future generations about.